Deep Speech Factorization

Latest revision as of 01:50, 30 October 2017 (Monday)


Project name

Deep Factorization for Speech Signals

Project members

Dong Wang, Lantian Li, Zhiyuan Tang

Introduction

Various informative factors are mixed in speech signals, which makes it very difficult to decode any single factor. By informative factors we mean task-oriented factors, e.g., linguistic content, speaker identity, and emotion, rather than factors of physical models, e.g., the excitation and modulation of the traditional source-filter model.

A natural idea is that, if we could factorize speech signals into individual informative factors, all speech signal processing tasks would be greatly simplified. However, as expected, this factorization is highly difficult, for at least two reasons. First, the mechanism by which the various factors are mixed together is far from clear; all we know right now is that it is rather complex. Second, it is also far from known whether some typical factors, e.g., speaker traits, are short-time identifiable. That is to say, we essentially did not know whether speech signals are short-time factorizable until we recently found a deep speaker feature learning approach [1].

The discovery that speech signals are short-time factorizable is important, and it opens a door to a new paradigm of speech research. This project follows this direction and attempts to lay down the foundation of this new science.

Speaker feature learning

The discovery of the short-time property of speaker traits is the key step towards speech signal factorization, as the speaker trait is one of the two main factors; the other is linguistic content, which has long been known to consist of short-time patterns.

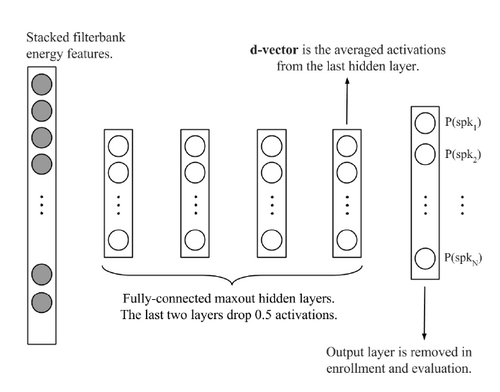

The key idea of speaker feature learning is to discriminate the training speakers based on short-time frames using deep neural networks (DNNs), an approach that dates back to the 2014 work of Variani et al. [2]. As shown below, the output of the DNN corresponds to the training speakers, and the frame-level speaker features are read from the last hidden layer. The basic assumption here is: if the output of the last hidden layer can be used as the input feature of the output layer (a softmax regression classifier), these features should be speaker discriminative.

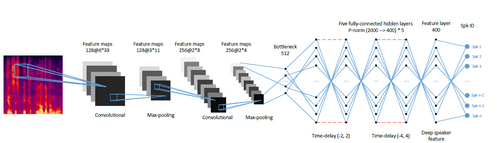

However, the vanilla structure of Variani et al. performs rather poorly compared to its i-vector counterpart. One reason is that the simple back-end scoring relies on averaging the frame-level features to derive utterance-level representations (called d-vectors); another is that the vanilla DNN structure does little to model context or learn patterns. We therefore proposed a CT-DNN model that can learn stronger speaker features. The structure is shown below [1]:

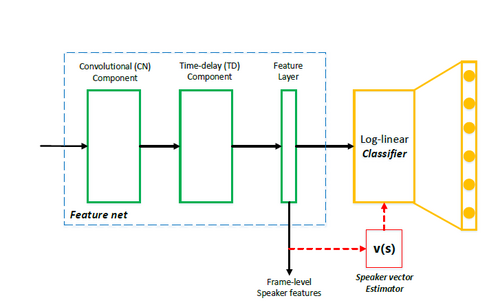
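The simple d-vector back-end mentioned above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the frame-level features have already been read from the last hidden layer, and the function names `d_vector` and `cosine` as well as the toy feature values are hypothetical.

```python
import math

def d_vector(frame_features):
    """Average frame-level speaker features into one utterance-level vector."""
    n_frames = len(frame_features)
    dim = len(frame_features[0])
    return [sum(f[i] for f in frame_features) / n_frames for i in range(dim)]

def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Toy 2-dimensional "frame-level features" (made-up values for illustration).
enroll = [[1.0, 0.0], [0.8, 0.2], [0.9, 0.1]]   # enrollment utterance
test_same = [[0.9, 0.2], [1.0, 0.0]]            # same speaker
test_other = [[0.1, 1.0], [0.0, 0.8]]           # different speaker

score_same = cosine(d_vector(enroll), d_vector(test_same))
score_other = cosine(d_vector(enroll), d_vector(test_other))
print(score_same > score_other)
```

Because the d-vector is a plain average, every frame contributes equally to the utterance representation, which is one reason this back-end is considered simple.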

Recently, we found that an 'all-info' training is effective for learning features. Looking back at the DNN and CT-DNN, although the features read from the last hidden layer are discriminative, they are not 'all discriminative', because some discriminative information can also be implemented in the last affine layer. A better strategy is to let the feature generation net (feature net) learn everything needed for discrimination. To achieve this, we discard the parametric classifier (the last affine layer) and use the simple cosine distance to conduct the classification. An iterative training scheme can be used to implement this idea: after each epoch, the speaker features are averaged to derive speaker vectors, and these speaker vectors then take the place of the discarded last affine layer. Training then proceeds as usual. The new structure is described in [4].
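As a rough illustration of the iterative scheme described above, the sketch below shows only the two non-parametric pieces: deriving speaker vectors by averaging features, and classifying a frame by cosine similarity. The feature net and its training loop are omitted, and the names `speaker_vectors` and `classify` and the toy data are assumptions made for this example.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def speaker_vectors(features, labels):
    """Average the frame-level features of each speaker.

    In the iterative scheme this would be recomputed after every epoch,
    using features produced by the current feature net.
    """
    sums, counts = {}, {}
    for feat, spk in zip(features, labels):
        if spk not in sums:
            sums[spk] = [0.0] * len(feat)
            counts[spk] = 0
        sums[spk] = [s + x for s, x in zip(sums[spk], feat)]
        counts[spk] += 1
    return {spk: [s / counts[spk] for s in sums[spk]] for spk in sums}

def classify(feat, spk_vecs):
    """Pick the speaker whose vector has the highest cosine similarity."""
    return max(spk_vecs, key=lambda spk: cosine(feat, spk_vecs[spk]))

# Toy features standing in for the output of the feature net.
features = [[1.0, 0.1], [0.9, 0.0], [0.1, 1.0], [0.0, 0.8]]
labels = ["spk_a", "spk_a", "spk_b", "spk_b"]

vecs = speaker_vectors(features, labels)
predicted = classify([0.7, 0.2], vecs)
print(predicted)
```

Since the classifier has no trainable parameters of its own, any improvement in classification has to come from the feature net, which is the point of the strategy.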

Speech factorization

The short-time property is very valuable: it tells us that it is possible to factorize speech signals. Factorization brings significant benefits:

A. Individual tasks can be largely improved, as unrelated factors are removed.

B. Factors that used to be disturbances become valuable information, leading to conditional training and collaborative training [5].

C. Once the factors have been separated, single factors can be manipulated, and reassembling them changes the signal as needed.

D. It is a new speech coding scheme that leverages knowledge learned from large data.

Traditional factorization methods are based on probabilistic models and maximum likelihood learning. For example, in joint factor analysis (JFA), a linear Gaussian model is assumed for speaker and channel, and maximum likelihood (ML) estimation is then applied to estimate the loading matrix of each factor, based on long speech segments. Almost all of these factorizations share the same features: shallow, linear, Gaussian, and based on long-term segments.

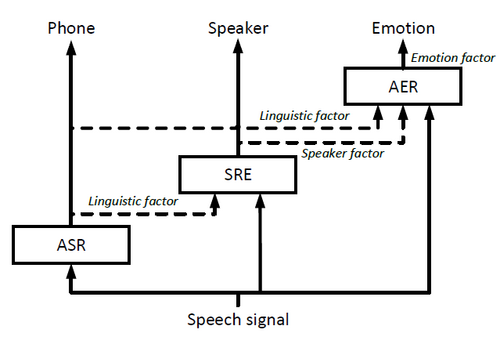

We are more interested in factorization at the frame level, placing few assumptions on how the factors are mixed. A cascaded factorization approach has been proposed [6]. The basic idea is to factorize the most significant factors first, and then infer the remaining factors conditioned on those already derived. The architecture is as follows, where we factorize speech signals into three factors: linguistic content, speaker trait, and emotion. Each factor is learned with supervised learning. Note that with this architecture, databases with different target labels can be used in a complementary way, which differs from previous joint training approaches that need fully-labelled data.

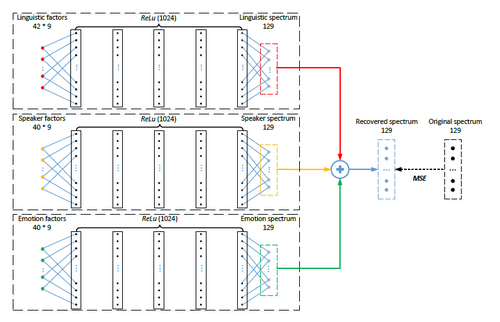
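The data flow of the cascade can be sketched as below. This is only a schematic: `toy_net` is a hypothetical stand-in for a trained DNN, the factor dimensions are arbitrary, and the order (linguistic, then speaker, then emotion) is assumed from the order in which the factors are listed above.

```python
def toy_net(inputs, out_dim):
    """Placeholder for a trained DNN: returns out_dim copies of the input mean."""
    mean = sum(inputs) / len(inputs)
    return [mean] * out_dim

def factorize(fbank_frame):
    """Cascaded factorization of one frame into three factors."""
    # Stage 1: linguistic factor, inferred from the acoustic frame alone.
    linguistic = toy_net(fbank_frame, out_dim=3)
    # Stage 2: speaker factor, conditioned on the linguistic factor.
    speaker = toy_net(fbank_frame + linguistic, out_dim=2)
    # Stage 3: emotion factor, conditioned on both earlier factors.
    emotion = toy_net(fbank_frame + linguistic + speaker, out_dim=2)
    return {"linguistic": linguistic, "speaker": speaker, "emotion": emotion}

frame = [0.2, 0.4, 0.6, 0.8]   # made-up 4-dimensional frame
factors = factorize(frame)
print({name: len(vec) for name, vec in factors.items()})
```

Each stage only needs labels for its own target, with the earlier stages frozen, which is why databases labelled for different tasks can be combined.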

Speech reconstruction

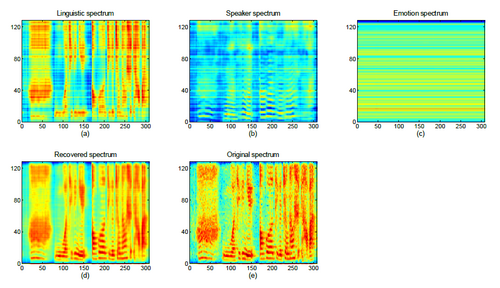

To verify the factorization, we can reconstruct the speech signal from the factors. The reconstruction is simply based on DNNs, as shown in the figure. Each factor passes through its own deep neural net; the outputs of the three DNNs are added together and compared with the target, which is the logarithm of the spectrum of the original signal. Because addition in the log-spectral domain corresponds to multiplication of spectra, this means that the outputs of the three factor DNNs are assumed to be convolved together to produce the original speech.

Note that the factors are learned from Fbanks, in which some speech information has been lost; nevertheless, the recovery is rather successful.
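The link between adding log spectra and convolving signals can be checked numerically. The sketch below is an assumption-laden toy: three short made-up sequences stand in for the time-domain components associated with the three factors, and a naive DFT is used so that only the Python standard library is needed.

```python
import cmath
import math

def dft(x, n):
    """Naive n-point DFT of x, zero-padded to length n."""
    padded = list(x) + [0.0] * (n - len(x))
    return [sum(padded[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def convolve(a, b):
    """Linear convolution of two sequences."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out

def log_magnitude(x, n):
    """Log-magnitude spectrum of x on n DFT bins."""
    return [math.log(abs(c)) for c in dft(x, n)]

# Three toy components (chosen so that no DFT bin is zero).
a = [1.0, 0.5, 0.25]
b = [1.0, -0.3]
c = [1.0, 0.2, 0.1]

signal = convolve(convolve(a, b), c)   # length 6
n = 8                                  # DFT size, at least len(signal)

summed = [la + lb + lc for la, lb, lc in
          zip(log_magnitude(a, n), log_magnitude(b, n), log_magnitude(c, n))]
direct = log_magnitude(signal, n)
max_gap = max(abs(s - d) for s, d in zip(summed, direct))
print(max_gap < 1e-9)
```

The sum of the three log-magnitude spectra matches the log-magnitude spectrum of the convolved signal up to rounding error, which is the relationship the additive reconstruction relies on.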

View the reconstruction

More recovery examples can be found on the dsf-examples page.

Listen to the reconstruction

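We can listen to the waveform of each factor, resynthesized using the original phase.

Original speech: http://wangd.cslt.org/research/cdf/demo/CHEAVD_1_1_E02_001_worried.wav

Linguistic factor: http://wangd.cslt.org/research/cdf/demo/phone.wav

Speaker factor: http://wangd.cslt.org/research/cdf/demo/speaker.wav

Emotion factor: http://wangd.cslt.org/research/cdf/demo/emotion.wav

Linguistic + Speaker + Emotion: http://wangd.cslt.org/research/cdf/demo/recovery.wav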
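Resynthesis with the original phase can be sketched for a single frame as follows. This is a minimal illustration under stated assumptions: the helper names are hypothetical, the frame values are made up, and a real system would work on overlapping windowed frames and recombine them, which is not shown here.

```python
import cmath
import math

def dft(x):
    """Naive DFT of a real frame."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft_real(spec):
    """Naive inverse DFT, keeping the real part."""
    n = len(spec)
    return [sum(spec[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]

def resynthesize(log_mag, phase):
    """Combine a log-magnitude spectrum with a phase spectrum and invert it."""
    spec = [cmath.rect(math.exp(lm), ph) for lm, ph in zip(log_mag, phase)]
    return idft_real(spec)

# Analysis of the original frame (made-up samples with no zero DFT bin).
frame = [0.5, -0.2, 0.1, 0.3]
spectrum = dft(frame)
original_phase = [cmath.phase(c) for c in spectrum]
original_log_mag = [math.log(abs(c)) for c in spectrum]

# With the original log-magnitude, the frame is recovered exactly; replacing
# original_log_mag by a factor-specific log-spectrum would give that factor's
# waveform with the original phase.
recovered = resynthesize(original_log_mag, original_phase)
max_err = max(abs(r - f) for r, f in zip(recovered, frame))
print(max_err < 1e-9)
```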

Research directions

- Adversarial factor learning

- Phone-aware multiple d-vector back-end for speaker recognition

- TTS adaptation based on speaker factors

References

[1] Lantian Li, Yixiang Chen, Ying Shi, Zhiyuan Tang, and Dong Wang, "Deep speaker feature learning for text-independent speaker verification," Interspeech 2017.

[2] Ehsan Variani, Xin Lei, Erik McDermott, Ignacio Lopez Moreno, and Javier Gonzalez-Dominguez, "Deep neural networks for small footprint text-dependent speaker verification," ICASSP 2014.

[3] Lantian Li, Dong Wang, Yixiang Chen, Ying Shi, and Zhiyuan Tang, http://wangd.cslt.org/public/pdf/spkfact.pdf

[4] Lantian Li, Zhiyuan Tang, and Dong Wang, "Full-info training for deep speaker feature learning," http://wangd.cslt.org/public/pdf/mlspk.pdf

[5] Zhiyuan Tang, Lantian Li, Dong Wang, and Ravi Vipperla, "Collaborative joint training with multi-task recurrent model for speech and speaker recognition," IEEE Trans. on Audio, Speech and Language Processing, vol. 25, no. 3, March 2017.

[6] Dong Wang, Lantian Li, Ying Shi, Yixiang Chen, and Zhiyuan Tang, "Deep factorization for speech signal," https://arxiv.org/abs/1706.01777