Difference between revisions of "Phonetic Temporal Neural LID"

Revision as of 11:05, 31 October 2017 (Tuesday)

Project name

Phonetic Temporal Neural (PTN) Model for Language Identification

Project members

Dong Wang, Zhiyuan Tang, Lantian Li, Ying Shi

Introduction

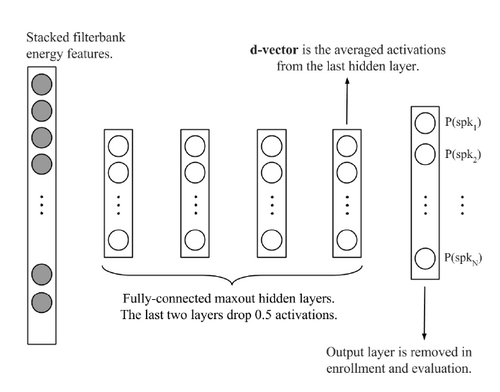

Deep neural models, particularly the LSTM-RNN model, have shown great potential for language identification (LID). However, most existing neural LID methods largely overlook phonetic information, even though it has been used very successfully in conventional phonetic LID systems. We present a phonetic temporal neural (PTN) model for LID: an LSTM-RNN LID system whose input is the phonetic features produced by a phone-discriminative DNN rather than raw acoustic features. The new model resembles traditional phonetic LID methods, but the phonetic knowledge it uses is much richer: it is available at the frame level and carries compacted information about all phones. Our experiments on the Babel database and the AP16-OLR database demonstrate that the PTN approach is very effective and significantly outperforms existing acoustic neural models. It also outperforms the conventional i-vector approach on short utterances and in noisy conditions.
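The two-stage data flow described above (acoustic frames, then frame-level phonetic features, then an LSTM that scores languages) can be illustrated with a minimal sketch. This is not the project's implementation: the layer sizes, the single-layer stand-in for the phone-discriminative DNN, the random untrained weights, the omission of bias terms, and the choice to classify from the final LSTM state are all assumptions made for illustration. It uses only the Python standard library.

```python
import math
import random

random.seed(0)

# Hypothetical sizes, chosen only to keep the sketch small.
ACOUSTIC_DIM, PHONETIC_DIM, HIDDEN_DIM, NUM_LANGS = 8, 6, 5, 3


def rand_matrix(rows, cols):
    """Small random weights; a real system would learn these."""
    return [[random.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def softmax(values):
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Stage 1: stand-in for the phone-discriminative DNN (assumption: one tanh layer).
W_PHONE = rand_matrix(PHONETIC_DIM, ACOUSTIC_DIM)


def phonetic_features(frame):
    """Map one acoustic frame to a frame-level phonetic feature vector."""
    return [math.tanh(a) for a in matvec(W_PHONE, frame)]


# Stage 2: a single LSTM layer that reads phonetic features, not acoustic ones.
W_GATES = {g: rand_matrix(HIDDEN_DIM, PHONETIC_DIM + HIDDEN_DIM) for g in "ifoc"}
W_OUT = rand_matrix(NUM_LANGS, HIDDEN_DIM)


def lstm_step(x, h, c):
    """One standard LSTM update (biases omitted for brevity)."""
    z = x + h  # list concatenation of the input and the previous hidden state
    i = [sigmoid(a) for a in matvec(W_GATES["i"], z)]    # input gate
    f = [sigmoid(a) for a in matvec(W_GATES["f"], z)]    # forget gate
    o = [sigmoid(a) for a in matvec(W_GATES["o"], z)]    # output gate
    g = [math.tanh(a) for a in matvec(W_GATES["c"], z)]  # candidate cell state
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, c_new


def language_posteriors(frames):
    """Run the whole utterance and score languages from the final state."""
    h = [0.0] * HIDDEN_DIM
    c = [0.0] * HIDDEN_DIM
    for frame in frames:
        h, c = lstm_step(phonetic_features(frame), h, c)
    return softmax(matvec(W_OUT, h))


# A fake 20-frame utterance of random "acoustic" vectors.
utterance = [[random.gauss(0.0, 1.0) for _ in range(ACOUSTIC_DIM)] for _ in range(20)]
posteriors = language_posteriors(utterance)
print(posteriors)
```

Because the weights are random, the printed posteriors carry no meaning; the point is only that the LSTM never sees the acoustic frames directly, which is the difference from an acoustic neural LID model.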

Phonetic feature

Research directions

- Adversarial factor learning

- Phone-aware multiple d-vector back-end for speaker recognition

- TTS adaptation based on speaker factors